TaRL in Karnataka

Background

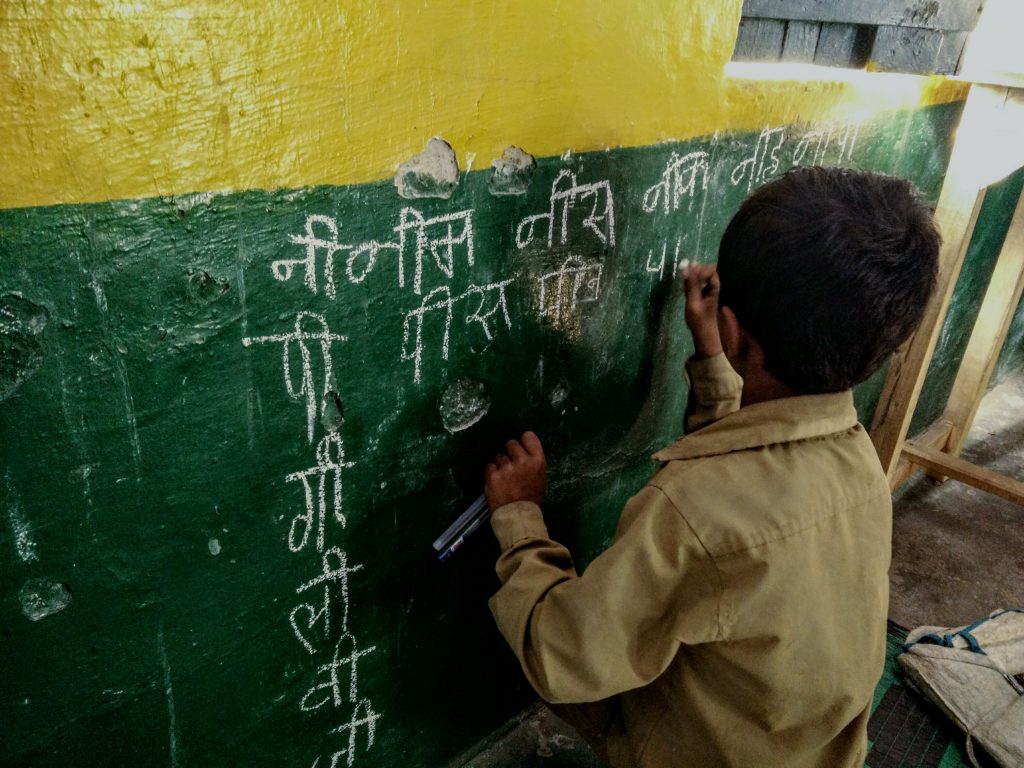

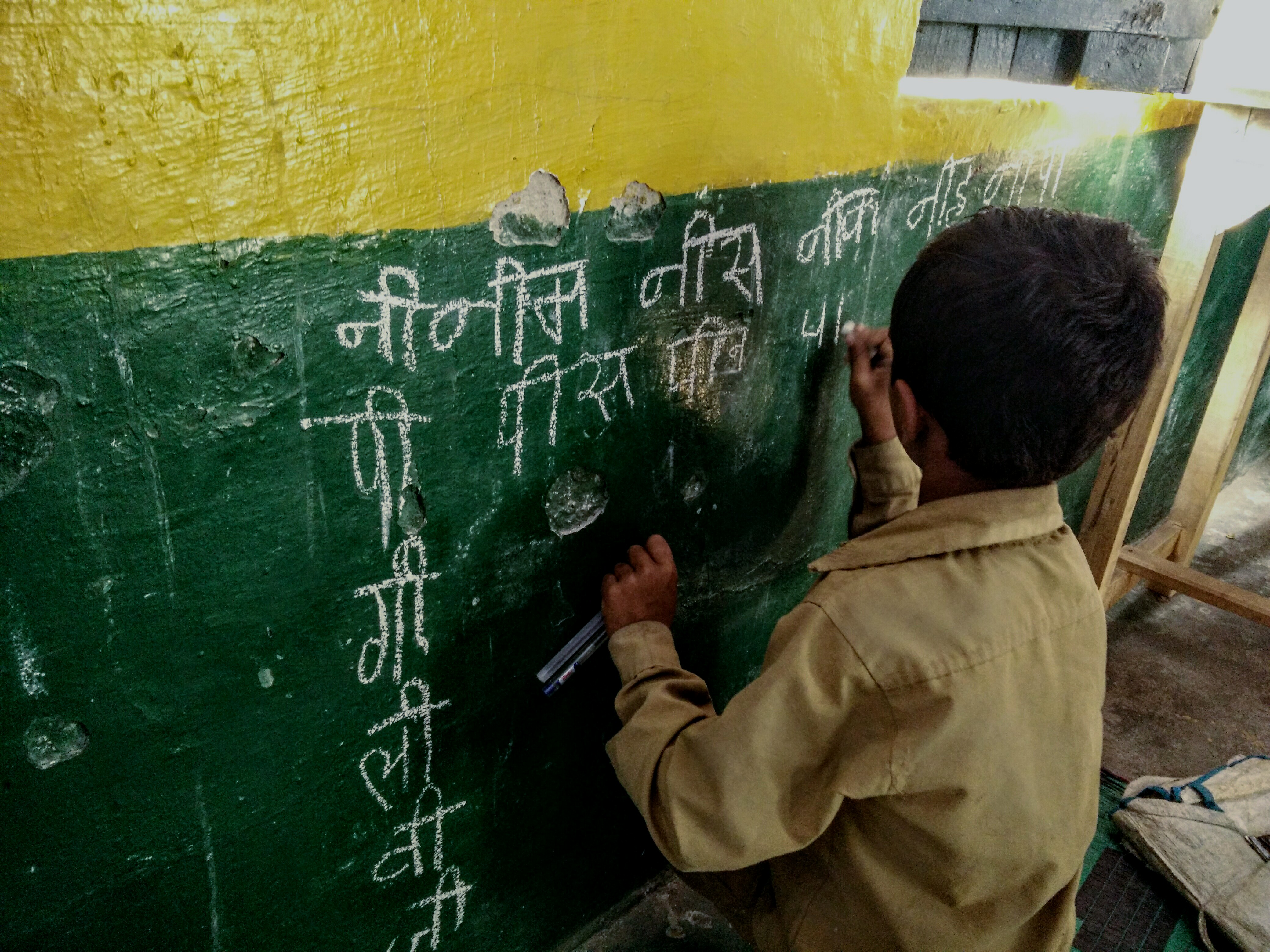

Karnataka is a state in the southern part of India. ‘Odu Karnataka’ (which means “Read Karnataka” in Kannada, the local language) was initiated in 2016-17 by the government of Karnataka, in partnership with Pratham Education Foundation, as a learning improvement program to address the needs of children in grades 4 and 5 in government schools.

In 2016-17, Odu Karnataka was implemented as a pilot in all primary government schools in three districts of Karnataka with the aim of improving basic reading and math skills of more than 70,000 students.

Based on the experiences of 2016-17, the government decided to scale up Odu Karnataka to 13 districts in 2017-18. DSERT (Department of State Educational Research and Training) and Pratham signed a Memorandum of Understanding to implement the program for the next three years, so that both the learning gains and the teaching-learning approach would be sustained. In 2017-18, the Odu Karnataka program was implemented in over 17,000 schools, reaching more than 470,000 children in 13 districts of Karnataka.

This case study highlights the processes and systems that were set up to continuously assess, review, learn and course-correct while implementing the program.

Team Structure


Pratham also deployed a team to support the program. At the state level, a senior Pratham staff member was responsible for overall coordination with DSERT, in consultation with the state team: 3-4 people responsible for training support, content creation and monitoring, and 1 measurement and monitoring associate responsible for coordinating data collection and analysis. Every district had 4-5 district in-charges (reduced to 1-3 in 2017-18), who coordinated with government officials at the district level and below to plan and support the activities in their district.

Two key pieces of information were collected:

1.) Information from schools about children’s learning levels and attendance

School teachers recorded children’s attendance and assessment data, and Cluster Resource Persons (CRPs) aggregated the data from the schools. Block-level data entry personnel collected these sheets from all CRPs in the block and entered the data into a data entry portal created by Pratham. District officials tracked the data entry status on the portal and followed up with blocks to ensure timely data entry. Pratham created dynamic data visualizations, available to everyone on a website, to facilitate timely decision-making.

2.) Information from mentors about TaRL classroom visits

CRPs recorded their classroom observations in a sheet. These sheets stayed with the CRPs so that they could draw on them during block-level review meetings. At higher levels, too, the focus was on discussing the observations in review meetings; the observation data itself was not entered into any data system.

The forms and processes are explained in detail below.

Information From Schools

The following processes were used to collect, enter, analyse and report the data quickly. Everyone involved was thoroughly trained on these forms, systems and processes.

a) Teachers used the Learning Progress Sheet to record child-wise assessment and attendance data. The sheet was designed to be easy for teachers to fill in. After completing the one-to-one assessments, teachers aggregated the data, which gave them a clear picture of their class’s learning levels.
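The case study does not list the sheet’s columns or the assessment levels. As a minimal sketch, assuming a five-level reading scale (Beginner, Letter, Word, Paragraph, Story, an assumption here) and one record per child, the teacher’s aggregation step amounts to counting the children at each level. The function name `summarize_class` and the record format are hypothetical.

```python
from collections import Counter

# ASSUMPTION: five reading levels, lowest to highest; the case study does not list them.
READING_LEVELS = ["Beginner", "Letter", "Word", "Paragraph", "Story"]


def summarize_class(records):
    """Aggregate child-wise rows of a hypothetical Learning Progress Sheet.

    Each record is a dict whose 'reading_level' is one of READING_LEVELS,
    or None if the child was not assessed.
    Returns (children per level, number assessed, number not assessed).
    """
    levels = [r["reading_level"] for r in records if r["reading_level"] is not None]
    unknown = set(levels) - set(READING_LEVELS)
    if unknown:
        raise ValueError(f"unknown reading level(s): {sorted(unknown)}")
    counts = Counter(levels)
    # Report every level, including levels with zero children.
    per_level = {level: counts[level] for level in READING_LEVELS}
    return per_level, len(levels), len(records) - len(levels)


# Example: four children, one of whom was not assessed.
sheet = [
    {"reading_level": "Beginner"},
    {"reading_level": "Word"},
    {"reading_level": "Word"},
    {"reading_level": None},
]
print(summarize_class(sheet))
# ({'Beginner': 1, 'Letter': 0, 'Word': 2, 'Paragraph': 0, 'Story': 0}, 3, 1)
```

Listing every level, even those with zero children, keeps the class summary in a fixed shape that is easy to copy onto a consolidation sheet.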

b) CRPs visited all schools within 3-4 days after the assessments to consolidate the data in the Cluster Consolidation Sheet. Note that the data was recorded grade-wise for every school. This sheet provided a summary of all schools a CRP was responsible for.

To increase engagement with data, the CRPs were also provided with a sheet to visualize the assessment data and to prioritize and plan their support to schools.
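The consolidation in step b) can be pictured as collecting each school’s grade-wise counts into one table and totalling them for the cluster. This is a minimal sketch; the tuple format, the five reading levels and the function name `consolidate_cluster` are assumptions, not details from the case study.

```python
# ASSUMPTION: five reading levels; not specified in the case study.
LEVELS = ["Beginner", "Letter", "Word", "Paragraph", "Story"]


def consolidate_cluster(entries):
    """Build a hypothetical cluster consolidation table.

    entries: iterable of (school, grade, counts), where counts maps a level
    to the number of children at that level in that grade of that school.
    Returns (rows keyed by (school, grade), cluster-wide totals per level).
    """
    rows = {}
    totals = {level: 0 for level in LEVELS}
    for school, grade, counts in entries:
        key = (school, grade)
        if key in rows:
            # Each school-grade pair should appear exactly once.
            raise ValueError(f"duplicate entry for {key}")
        rows[key] = {level: counts.get(level, 0) for level in LEVELS}
        for level in LEVELS:
            totals[level] += rows[key][level]
    return rows, totals


rows, totals = consolidate_cluster([
    ("School A", 4, {"Beginner": 3, "Word": 5}),
    ("School A", 5, {"Story": 7}),
    ("School B", 4, {"Letter": 2}),
])
print(totals)
# {'Beginner': 3, 'Letter': 2, 'Word': 5, 'Paragraph': 0, 'Story': 7}
```

Keeping one row per school and grade mirrors the grade-wise recording described above, while the totals give the CRP a single summary for the cluster.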

c) The Cluster Consolidation Sheets were submitted at the government block offices for data entry. Pratham designed an online data entry portal with a separate login account for every block. The portal was simple to use and had strict data validations to ensure accurate entry. Everyone involved in the program could track the status of data entry in real time. District officials followed up with blocks where data entry was lagging and ensured that it was completed within the stipulated time.
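The case study does not list the portal’s validation rules. The sketch below shows the kind of checks such a portal might apply to one entered row; the field names (`grade`, `enrolled`, per-level counts) and the rules themselves are hypothetical, apart from restricting grades to 4 and 5, the grades the program targeted.

```python
# ASSUMPTION: level names, field names and rules are illustrative, not the portal's.
LEVELS = ["Beginner", "Letter", "Word", "Paragraph", "Story"]
ALLOWED_GRADES = {4, 5}  # the program targeted grades 4 and 5


def validate_row(row):
    """Return a list of error messages for one entry row (empty if valid)."""
    errors = []
    if row.get("grade") not in ALLOWED_GRADES:
        errors.append("grade must be 4 or 5")
    enrolled = row.get("enrolled")
    enrolled_ok = isinstance(enrolled, int) and enrolled >= 0
    if not enrolled_ok:
        errors.append("enrolled must be a non-negative integer")
    counts = [row.get(level, 0) for level in LEVELS]
    if any(not isinstance(c, int) or c < 0 for c in counts):
        errors.append("level counts must be non-negative integers")
    elif enrolled_ok and sum(counts) > enrolled:
        # Children assessed cannot exceed children enrolled.
        errors.append("children assessed exceed children enrolled")
    return errors


print(validate_row({"grade": 4, "enrolled": 30, "Beginner": 5, "Word": 20}))  # []
print(validate_row({"grade": 5, "enrolled": 10, "Story": 11}))
# ['children assessed exceed children enrolled']
```

Returning every error at once, rather than stopping at the first, lets the person entering data fix a row in a single pass.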

d) An online dynamic dashboard, which was available to all on a website, was created to showcase data in an easy-to-understand manner. The dynamic dashboards allowed people to see the data that was relevant to them and compare their location’s data with other locations.
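Comparing one location with others is only meaningful once counts are converted to percentages, because locations differ in size. A minimal sketch, assuming each location’s data is a dictionary of children per (hypothetical) learning level; the function names are illustrative and not taken from the actual dashboard.

```python
def level_percentages(counts):
    """Convert {level: children} into {level: percent of children}, rounded to 0.1."""
    total = sum(counts.values())
    if total == 0:
        return {level: 0.0 for level in counts}
    return {level: round(100 * n / total, 1) for level, n in counts.items()}


def compare_locations(by_location, level):
    """Rank locations by the percentage of children at `level`, highest first."""
    rows = [
        (location, level_percentages(counts).get(level, 0.0))
        for location, counts in by_location.items()
    ]
    # Sort by descending percentage; break ties alphabetically by location.
    return sorted(rows, key=lambda row: (-row[1], row[0]))


districts = {  # hypothetical district names and counts
    "District 1": {"Beginner": 30, "Story": 10},
    "District 2": {"Beginner": 20, "Story": 20},
}
print(compare_locations(districts, "Story"))
# [('District 2', 50.0), ('District 1', 25.0)]
```

Breaking ties by name keeps the ranking stable between refreshes, so a location does not appear to move when its numbers have not changed.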

Information From Mentors

The cluster-level CRPs were the main cadre responsible for regular school visits and for mentoring teachers. Each CRP had 10-15 schools under their purview. Regular visits by CRPs were critical to ensure that the program was implemented as planned, to give teachers feedback, to review the program periodically and to take corrective measures. To prepare for this crucial role, the CRPs first needed to understand the TaRL approach well, so after the training, all CRPs conducted practice classes for 15-20 days. Having used the materials and methods themselves and seen children’s learning levels improve, these CRPs were much better able to train and guide the teachers in their charge.

The following measures were taken to set up a robust monitoring and review structure:

- Each member of the block monitoring team was linked with specific CRPs, making them accountable for those CRPs and their clusters. Every district nodal officer was also assigned a specific block.

- Each CRP was expected to make at least 5 visits to each school in his or her charge, providing support and guidance to teachers at every stage. The first visit was made in the first two weeks of the intervention, the second between the baseline and the midline, the third immediately after the midline, the fourth between the midline and the endline, and the last a few days before the endline. This was a guideline: CRPs were free to make more visits to schools that were struggling to make progress. In fact, based on the assessment data, each CRP identified 5 “least performing schools” to give additional support to.

- Once in a class, every CRP first observed the classroom activities and interacted with the children, and then demonstrated effective learning activities. CRPs noted important observations in a School Observation Sheet, which also served as a checklist of the things they should be observing:

- Attendance: overall and of the weakest children

- Assessment data use and understanding

- Grouping: appropriate and dynamic

- Materials: available and being used

- Activities: appropriate and participatory

- Progress: in reading and math

- Challenges: faced by teacher

- Review meetings were held twice a month at the block level and once a month at the district level. Nodal officers or BRLs led the block-level meetings, and the DIET (District Institute of Education and Training) Principals led the district-level meetings. These meetings focused on the observations made by mentors and on the activities they had undertaken in classes to support teachers and improve the situation. District-level Pratham team members attended these meetings to facilitate the discussions, and the group also used them to plan guidelines for subsequent visits.

- Review at the state level happened after every assessment cycle to compare progress across locations and discuss field-level challenges and strategies to overcome them. Participants in these meetings were government and Pratham state-level personnel and DIET Principals.

Build mentors’ capacity to support teachers

Conducting practice classes before implementation helped the district teams and CRPs solve major instructional and logistical challenges before the program was rolled out. These personal observations and reflections strengthened their belief in the activities and in the possibility of improving learning levels within a 60-day program. Even after implementation had started, Pratham staff regularly visited schools with the mentors and participated in review meetings to strengthen their mentoring.

Buy-in of the mentors to collect data

Data collection is often seen as an additional burden, especially by people involved in teaching and learning. By keeping the data collection processes simple and helping people see the importance of data, this challenge was largely overcome. First, the indicators collected were cut down to only the most useful ones. Second, the forms, portals and dashboards were designed to be simple to fill in and use. Most importantly, insights from the data reached the implementers on time.

Build people’s capacity to collect, understand and use data

During the training at various levels, separate sessions explained all forms and processes related to data collection. During review meetings, time was also set aside to discuss the results from the dashboards. Pratham members supported the government officials in building their capacity to understand and use data.

Technology should suit the ground realities

Data entry was delayed in a few locations because computers were inaccessible or network connectivity was poor. This showed that the data entry portals, dashboards and reports also needed to be mobile-friendly to reach more users. It also became clear that a single data collection strategy was not necessary for all locations; different strategies could be adopted depending on field conditions in each area.

Keep the focus on action

Mentors were given clear action steps based on data. For example, CRPs were asked to identify five “least performing” schools in their clusters after every round of assessments. The purpose was not to report this information to senior officials, but to enable the CRPs to probe why certain schools were not making enough progress. This action-oriented approach to data was influential in driving change in children’s learning outcomes.
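The case study does not say which measure CRPs used to pick their five least performing schools. As an illustrative assumption only, the sketch below ranks schools by the share of assessed children at Paragraph level or above (hypothetical level names) and returns the lowest k; the function name `least_performing` is likewise hypothetical.

```python
import heapq

# ASSUMPTION: "least performing" = lowest share of assessed children at
# Paragraph level or above. The case study does not define the measure.
TOP_LEVELS = ("Paragraph", "Story")


def least_performing(schools, k=5):
    """Return up to k school names with the lowest share at TOP_LEVELS.

    schools maps a school name to {level: children}. Schools with no
    assessed children are skipped because no share can be computed.
    """
    scored = []
    for school, counts in schools.items():
        total = sum(counts.values())
        if total == 0:
            continue
        share = sum(counts.get(level, 0) for level in TOP_LEVELS) / total
        scored.append((share, school))
    # nsmallest orders by share, then by school name on ties.
    return [school for _, school in heapq.nsmallest(k, scored)]


cluster = {
    "School A": {"Beginner": 1, "Story": 9},
    "School B": {"Beginner": 5, "Paragraph": 5},
    "School C": {"Letter": 10},
}
print(least_performing(cluster, k=2))  # ['School C', 'School B']
```

A share-based measure avoids penalizing small schools simply for having fewer children; a measure based on progress between assessment rounds would be an equally reasonable choice.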